Hierarchical policies that combine language and

low-level control have been shown to perform impressively long-horizon robotic tasks, by leveraging either zero-shot high-level

planners like pretrained language and vision-language models

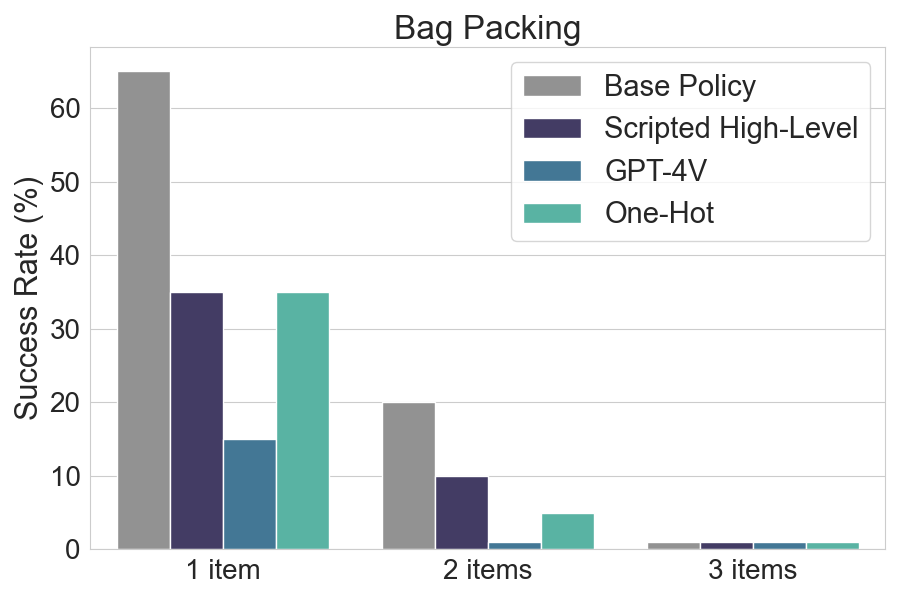

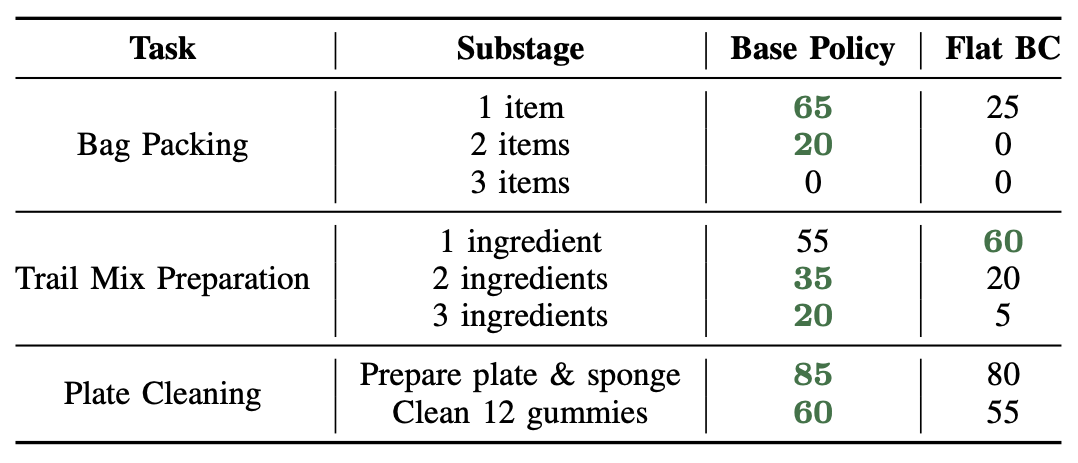

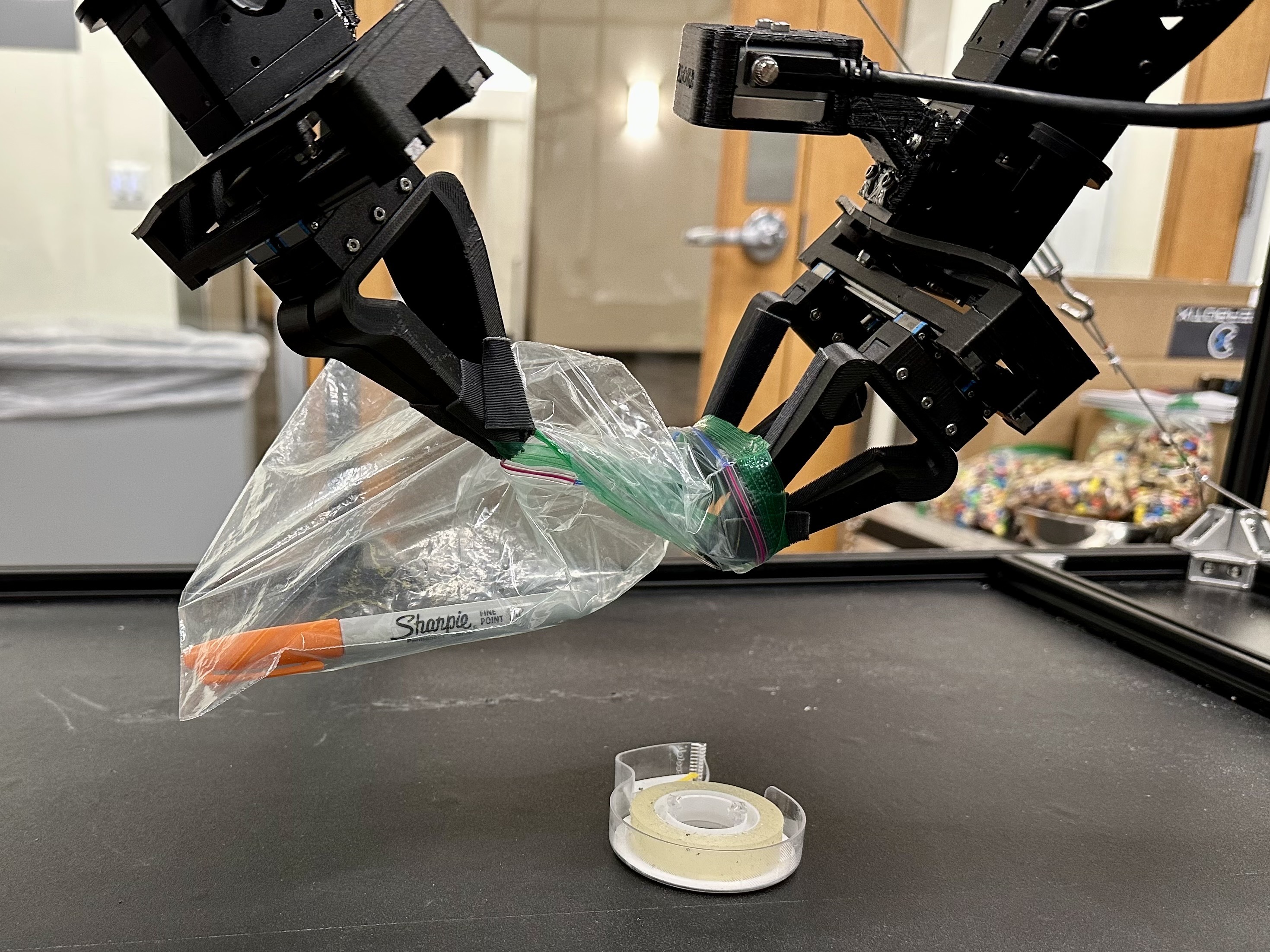

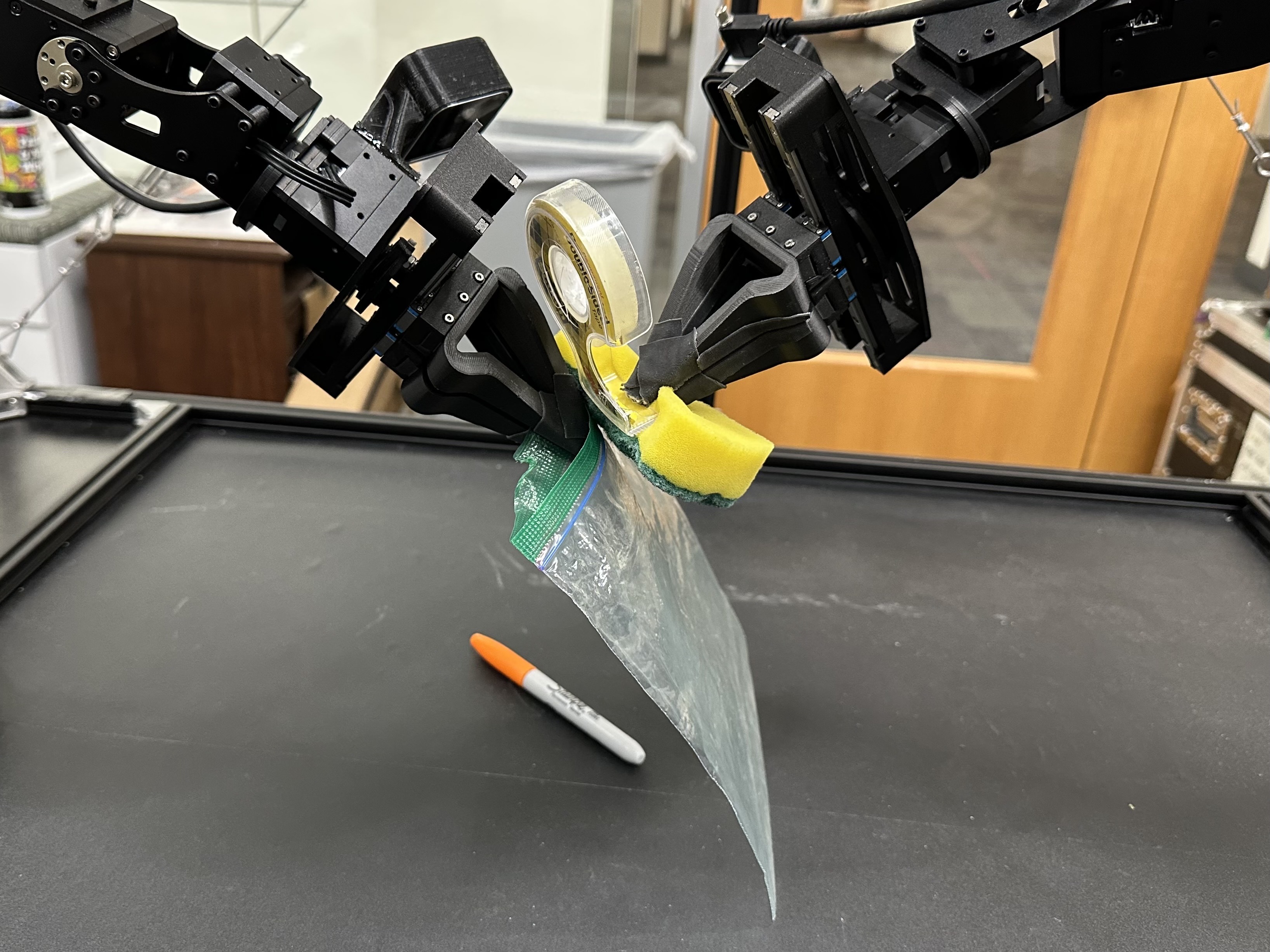

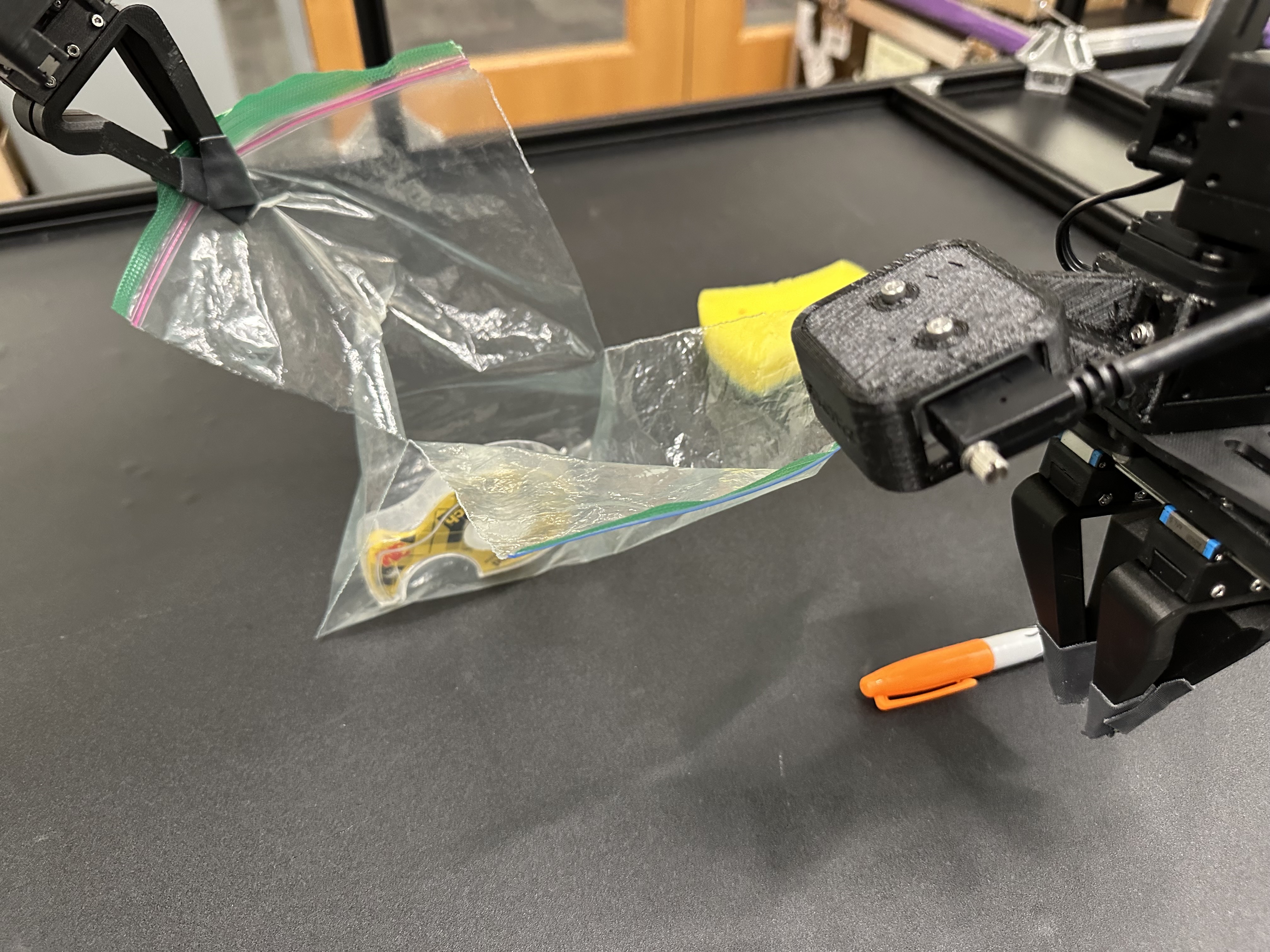

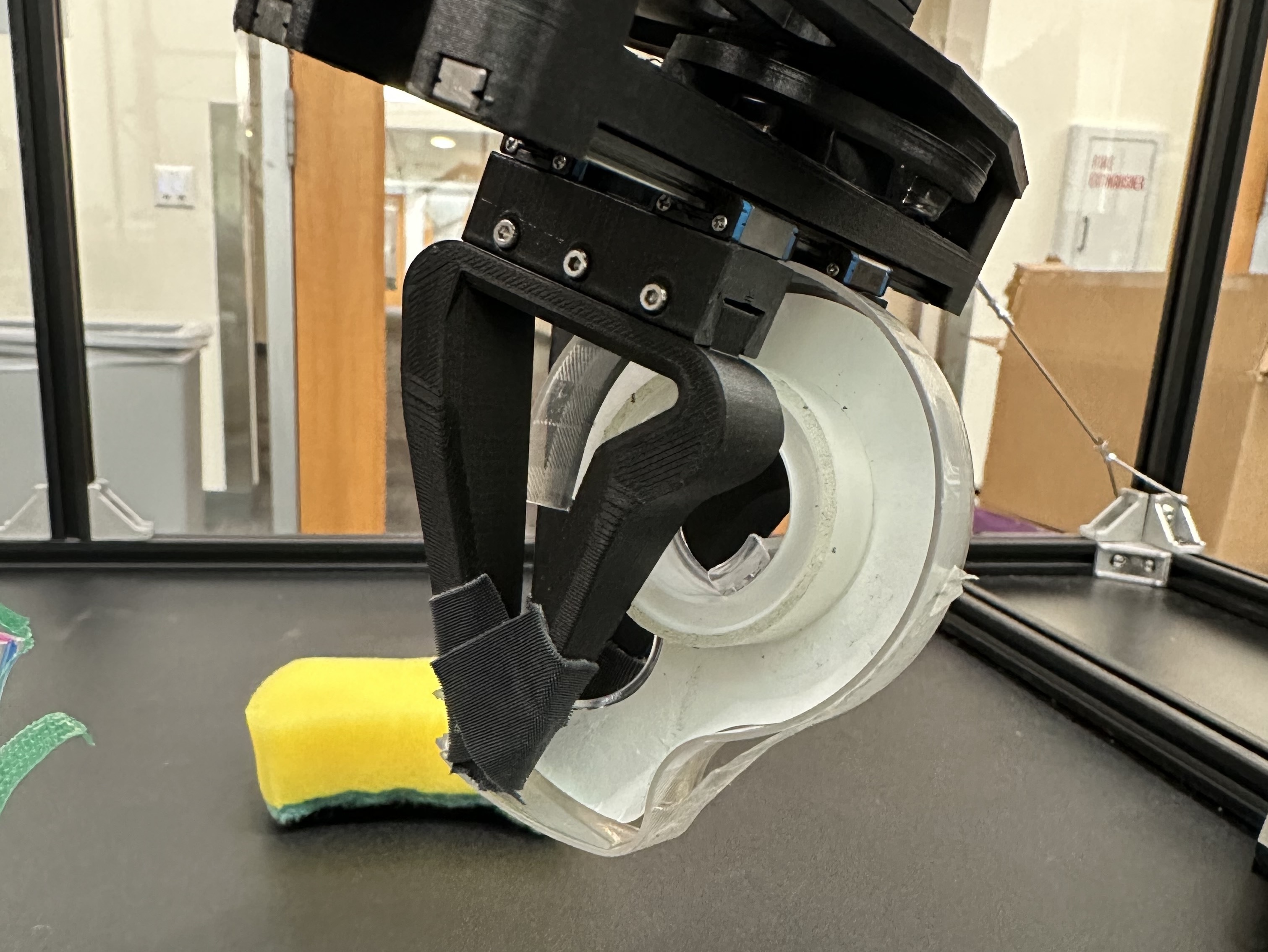

(LLMs/VLMs) or models trained on annotated robotic demonstrations. However, for complex and dexterous skills, attaining

high success rates on long-horizon tasks still represents a major

challenge -- the longer the task is, the more likely it is that

some stage will fail.
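As a rough illustration of why failures compound with task length, suppose (an assumption not made in the text) that each of n stages succeeds independently with the same probability p. The whole task then succeeds with probability p^n. The sketch below uses a hypothetical helper, `chain_success`, to compute this; with p = 0.95, the chance of completing every stage falls to roughly 0.60 at 10 stages and roughly 0.36 at 20.

```python
def chain_success(p_stage: float, n_stages: int) -> float:
    """Probability that all n stages succeed, each with probability p_stage.

    Hypothetical helper. Assumes stage outcomes are independent and equally
    likely to succeed; real failures are often correlated across stages.
    """
    if not 0.0 <= p_stage <= 1.0:
        raise ValueError("p_stage must be a probability in [0, 1]")
    if n_stages < 0:
        raise ValueError("n_stages must be non-negative")
    return p_stage ** n_stages


if __name__ == "__main__":
    # Task-level success shrinks geometrically as stages are added.
    for n in (1, 5, 10, 20):
        print(n, round(chain_success(0.95, n), 3))
```

Under this simplified model, even a small per-stage improvement pays off multiplicatively over long tasks, which is why correcting individual stages matters.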

Can humans help the robot to continuously improve its long-horizon task performance through intuitive and natural feedback?

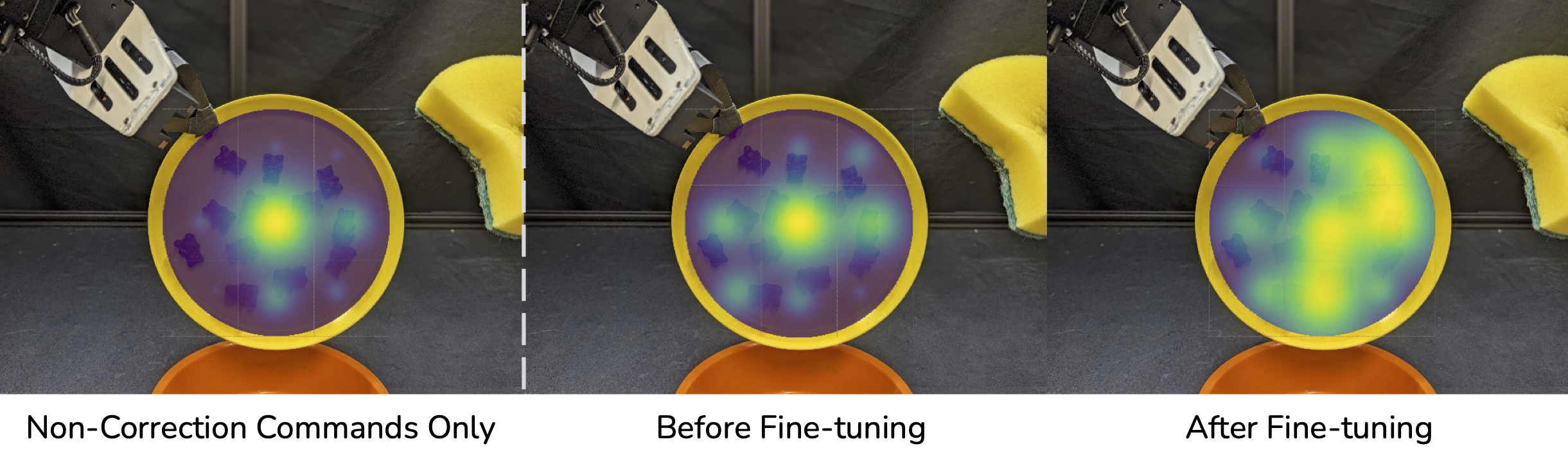

In this work, we make the following observation: high-level policies that index into sufficiently rich and expressive low-level language-conditioned skills can be readily supervised with human feedback in the form of language corrections.

We show that even fine-grained corrections, such as small movements (“move a bit to the left”), can be effectively incorporated into high-level policies, and that such corrections can be readily obtained from humans observing the robot and making occasional suggestions.

This framework enables robots not only to rapidly adapt to real-time language feedback, but also to incorporate this feedback into an iterative training scheme that improves the high-level policy's ability to correct errors in both low-level execution and high-level decision-making, purely from verbal feedback.
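The correction loop described in this paragraph can be sketched as follows. This is a minimal, hypothetical sketch, not the authors' implementation: `step`, `CorrectionBuffer`, and `build_finetune_dataset` are illustrative names, observations are stood in by strings, and the high- and low-level policies are arbitrary callables. At each step, a human's verbal correction, when present, replaces the high-level policy's command, is executed by the language-conditioned skill, and is logged with the current observation so the logged pairs can later be added to the high-level policy's training data.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical stand-in: a real system would use camera images and robot state.
Observation = str
Example = Tuple[Observation, str]  # (observation, language command) pair


@dataclass
class CorrectionBuffer:
    """Collects (observation, command) pairs produced by human corrections."""

    pairs: List[Example] = field(default_factory=list)

    def add(self, obs: Observation, command: str) -> None:
        self.pairs.append((obs, command))


def step(
    obs: Observation,
    high_level_policy: Callable[[Observation], str],
    low_level_policy: Callable[[Observation, str], None],
    human_correction: Optional[str],
    buffer: CorrectionBuffer,
) -> str:
    """Run one high-level decision; a verbal correction overrides the policy."""
    if human_correction is not None:
        command = human_correction
        # Keep the correction as a supervision label for the high-level policy.
        buffer.add(obs, command)
    else:
        command = high_level_policy(obs)
    # Execute the chosen command with the language-conditioned low-level skill.
    low_level_policy(obs, command)
    return command


def build_finetune_dataset(
    demos: Sequence[Example], buffer: CorrectionBuffer
) -> List[Example]:
    """Merge original (observation, command) data with logged corrections."""
    return list(demos) + list(buffer.pairs)


if __name__ == "__main__":
    executed: List[str] = []
    buf = CorrectionBuffer()

    def planner(obs: Observation) -> str:
        return "grasp the object"

    def skill(obs: Observation, command: str) -> None:
        executed.append(command)

    step("frame_0", planner, skill, None, buf)
    step("frame_1", planner, skill, "move a bit to the left", buf)
    print(executed)
    print(build_finetune_dataset([("demo_0", "grasp the object")], buf))
```

In this sketch the correction becomes a label for the high-level policy rather than for the low-level controller: because the skills are already conditioned on language, a verbal correction can be reused as training data without collecting new teleoperated demonstrations.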

Our evaluation on real hardware shows that this leads to significant performance improvements on long-horizon, dexterous manipulation tasks without the need for any additional teleoperation.